data_path = Path('../img_data/')

imgs_path = data_path/'images'

parq_file = data_path/'data.parquet'

img_size = 512

channels = 1

batch_size = 4

Data

SLDataModule

SLDataModule (parq_file, data_path='', train_tfms=None, test_tfms=None, train_size=0.8, valid_size=0.2, test_size=0.1, img_size=512, channels=3, batch_size=64, num_workers=6, pin_memory=False, calc_stats=False, stats_img_size=224, stats_file='img_stats.pkl', seed=42, **kwargs)

DataModule for single label classification.

SLDataset

SLDataset (data, data_path='', tfms=None, img_idx=0, label_idx=1, channels=3, class_names=None, **kwargs)

Dataset for single label classification.
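The batch inspected in the Usage section is a dict with `'image'` and `'label'` keys, and the data module exposes an `idx_to_class` mapping. The following is a minimal sketch of a map-style dataset with that shape, not the library's implementation. It assumes each row is a sequence whose image path and label are selected by `img_idx` and `label_idx`. `ToyDataset` and its `load_fn` hook are hypothetical names introduced only for illustration.

```python
from pathlib import Path


class ToyDataset:
    """Hypothetical stand-in for a single-label dataset (not the real SLDataset)."""

    def __init__(self, rows, data_path="", load_fn=None,
                 img_idx=0, label_idx=1, class_names=None):
        self.rows = list(rows)
        self.data_path = Path(data_path)
        self.img_idx, self.label_idx = img_idx, label_idx
        # load_fn is an assumed hook; a real dataset would decode the image file here.
        self.load_fn = load_fn or (lambda p: str(p))
        # Derive a stable class ordering when none is given.
        names = class_names or sorted({r[label_idx] for r in self.rows})
        self.class_to_idx = {name: i for i, name in enumerate(names)}
        self.idx_to_class = {i: name for name, i in self.class_to_idx.items()}

    def __len__(self):
        return len(self.rows)

    def __getitem__(self, i):
        row = self.rows[i]
        image = self.load_fn(self.data_path / row[self.img_idx])
        label = self.class_to_idx[row[self.label_idx]]
        # Same dict layout as the batches shown in the Usage section.
        return {"image": image, "label": label}


rows = [("a.png", "2"), ("b.png", "1"), ("c.png", "3")]
ds = ToyDataset(rows, data_path="images")
sample = ds[0]
```

Returning a dict rather than a tuple keeps batch access by name (`tb['image']`, `tb['label']`) independent of field order.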

StatsDataset

StatsDataset (data, data_path='', img_idx=0, tfms=None, channels=3, **kwargs)

Dataset for calculating the mean and std of a dataset.

get_data_stats

get_data_stats (df, data_path='', img_idx=0, img_size=224, channels=3, stats_percentage=0.7, bs=32, num_workers=4, device=None)

Calculates the mean and std of a dataset.
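The log in the Usage section prints a "Mean loop" followed by a "Std loop", which suggests a two-pass computation. The sketch below illustrates that idea in plain Python for single-channel images. It is an assumption about the general technique, not the code behind `get_data_stats`: the helper names `chunks` and `two_pass_stats` are hypothetical, the population standard deviation is an arbitrary choice, and the subsampling implied by `stats_percentage` is not modeled.

```python
import math


def chunks(items, bs):
    """Yield consecutive slices of at most `bs` items."""
    for start in range(0, len(items), bs):
        yield items[start:start + bs]


def two_pass_stats(images, bs=32):
    """Return (mean, std) over all pixels of single-channel images.

    `images` is a list of flat pixel lists scaled to [0, 1].
    """
    n_pixels = sum(len(img) for img in images)

    # Mean loop: accumulate the pixel sum batch by batch.
    total = 0.0
    for batch in chunks(images, bs):
        total += sum(sum(img) for img in batch)
    mean = total / n_pixels

    # Std loop: accumulate squared deviations from the global mean.
    sq_dev = 0.0
    for batch in chunks(images, bs):
        sq_dev += sum((p - mean) ** 2 for img in batch for p in img)
    std = math.sqrt(sq_dev / n_pixels)
    return mean, std


images = [[0.0, 1.0], [1.0, 1.0], [0.0, 1.0]]
mean, std = two_pass_stats(images, bs=2)
```

The second pass needs the global mean from the first, which is why the dataset is traversed twice.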

split_df

split_df (train_df, test_size=0.15, stratify_idx=1)
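The `stratify_idx=1` default points at the label column, so the split presumably preserves class proportions. A minimal sketch of a stratified split over plain row tuples follows. `stratified_split` is a hypothetical helper, and its rounding and seeding behavior are assumptions rather than a description of `split_df`.

```python
import random
from collections import defaultdict


def stratified_split(rows, test_size=0.15, stratify_idx=1, seed=42):
    """Split rows into (train, test), keeping class proportions roughly equal."""
    groups = defaultdict(list)
    for row in rows:
        groups[row[stratify_idx]].append(row)

    rng = random.Random(seed)
    train, test = [], []
    for label in sorted(groups):
        members = groups[label][:]
        rng.shuffle(members)
        # Aim for at least one test row per class, but never take the whole class.
        n_test = max(1, round(len(members) * test_size))
        n_test = min(n_test, len(members) - 1)
        test.extend(members[:n_test])
        train.extend(members[n_test:])
    return train, test


rows = [(f"img_{i}.png", i % 2) for i in range(20)]
train, test = stratified_split(rows, test_size=0.2)
```

Splitting per class keeps rare labels represented in both parts, which a plain random split does not guarantee on small datasets.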

Usage

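# Calculate stats: the mean/std values passed below are placeholders; with calc_stats=True, the Normalize step shows values computed from the dataset after prepare_data()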

train_tfms = create_transform(img_size, color_jitter=None, hflip=0.5, vflip=0.5, scale=(0.8,1.0),

is_training=True, mean=[1,2,3], std=[4,5,6])

test_tfms = create_transform(img_size, mean=[1,2,3], std=[4,5,6])

dm = SLDataModule(parq_file, data_path=data_path, img_size=img_size, batch_size=batch_size,

train_tfms=train_tfms, test_tfms=test_tfms, channels=channels, num_workers=6, calc_stats=True)

dm.prepare_data()

dm.train_tfms

Global seed set to 42

Calculating dataset mean and std. This may take a while.

Mean loop:

Batch: 1/1

Std loop:

Batch: 1/1

Done.

Compose(

RandomResizedCropAndInterpolation(size=(512, 512), scale=(0.8, 1.0), ratio=(0.75, 1.3333), interpolation=bilinear)

RandomHorizontalFlip(p=0.5)

RandomVerticalFlip(p=0.5)

ToTensor()

Normalize(mean=tensor([0.7367]), std=tensor([0.4174]))

)

dm.setup()

tb = next_batch(dm.train_dataloader())

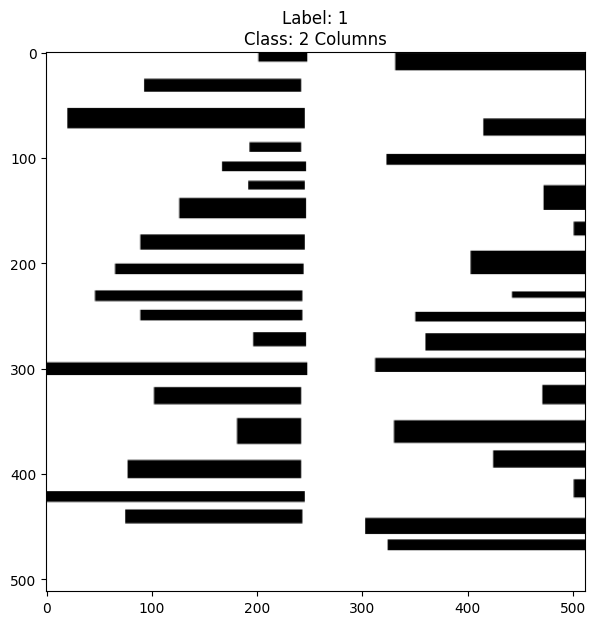

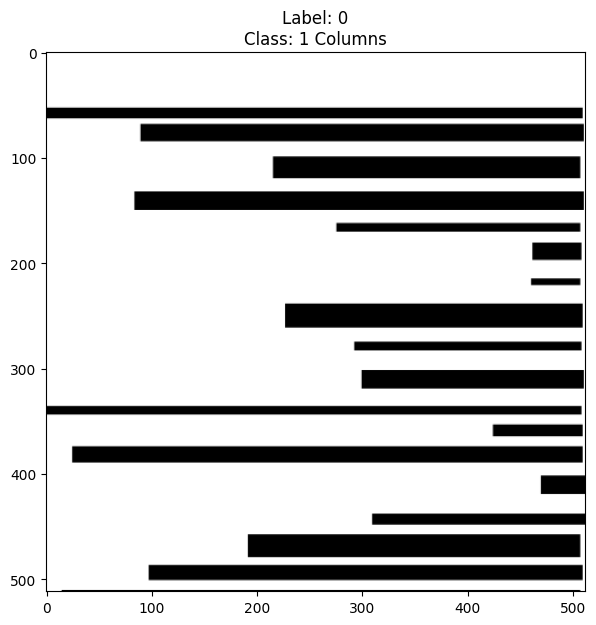

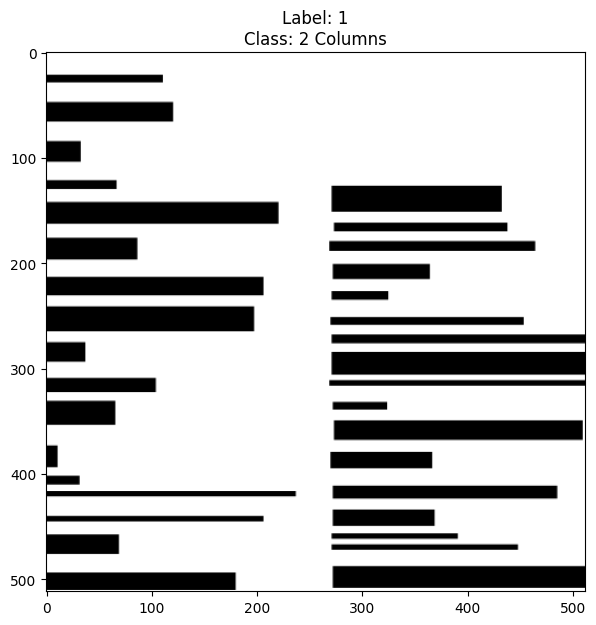

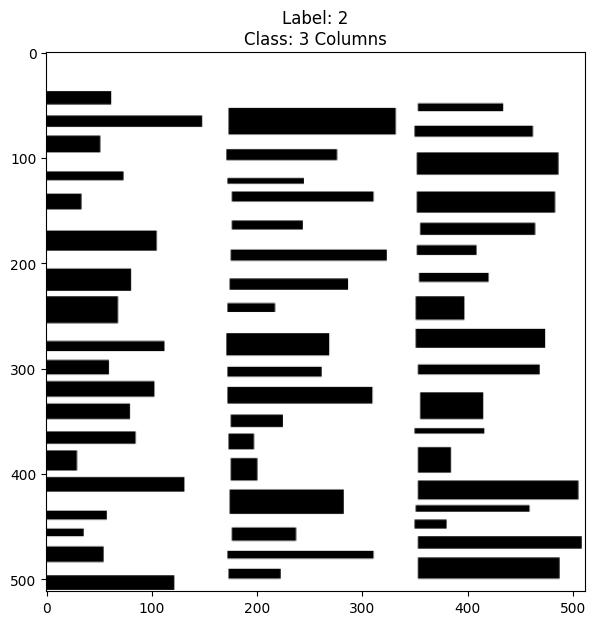

img = tb['image'][0]

tb['image'].shape, img.shape

(torch.Size([4, 1, 512, 512]), torch.Size([1, 512, 512]))

preds = [f'Label: {l}\nClass: {dm.idx_to_class[int(l)]} Columns' for l in tb['label']]

preds

['Label: 1\nClass: 2 Columns',

'Label: 0\nClass: 1 Columns',

'Label: 1\nClass: 2 Columns',

'Label: 2\nClass: 3 Columns']

show_img([img for img in tb['image']], cmap='gray', titles=preds)